You may have read a lot about teenagers committing self-harm after interactions with AI. OpenAI’s ChatGPT has been the primary AI chatbot in most of these cases. There has been a lot of discussion and criticism around this, and most of the onus has been placed on AI companies like OpenAI. In this article, I am not going to propose a technology solution. I aim to challenge the way we are primarily blaming OpenAI for these instances of self-harm.

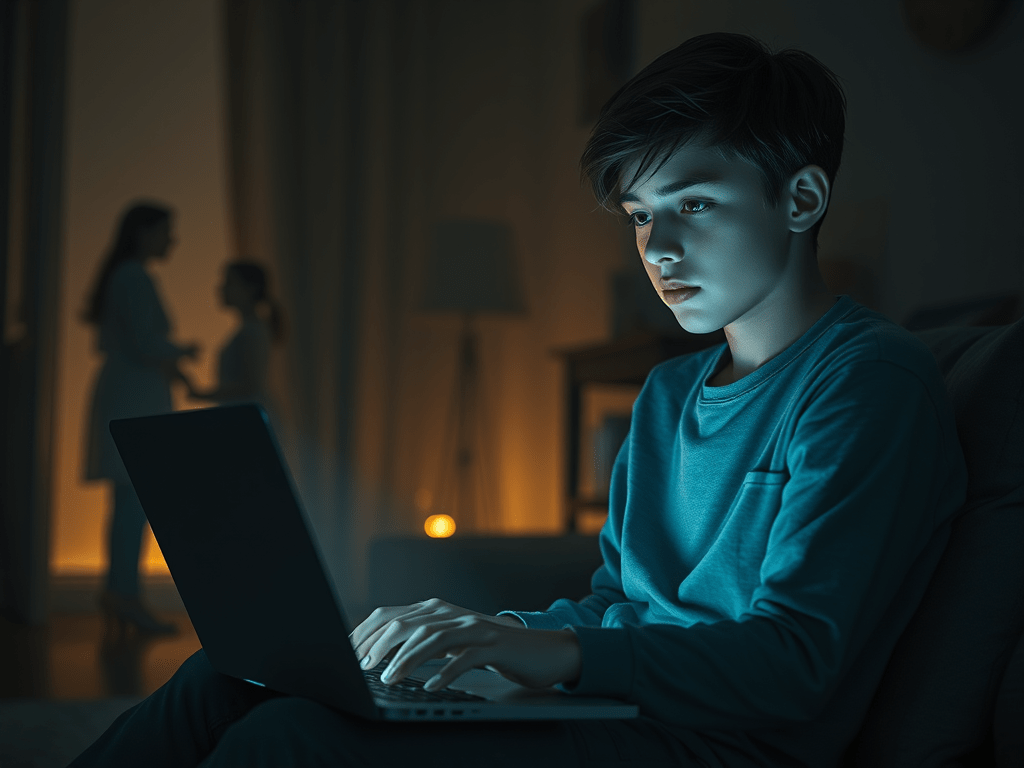

There is no doubt that teenagers do not have the maturity to make the best decisions for themselves. They are emotionally vulnerable. No one can understand this better than the father of a special needs teenager. But let us probe the primary problem here. The primary problem, the one that eventually leads to catastrophe, is that the teenagers in question were emotionally dependent on AI for meaningful conversations.

These kids should have felt emotionally connected enough with their families to have such conversations with them. Imagine a lack of emotional support so severe that, despite knowing there was no real person on the other end, they had to pour their hearts out to a chatbot.

Are we trying to run away from our own responsibilities by blaming AI for a problem caused by humans?

I have personally experienced family shying away from responsibilities my whole life. I saw my grandfather die alone in a rented single room, with no family member around, in the same city where my parents were living in a mansion. A mansion they were able to build because of jobs that my grandfather helped them get.

I have seen my parents run away from parental responsibilities and abandon me, my wife, and my son when we asked them for financial help, at a time when money was so tight that my son had to wear glued shoes to school. Emotional abandonment is real.

Those who are mentally strong can handle emotional abandonment with maturity. For the mentally vulnerable, like many teenagers, not being able to connect with someone emotionally can almost feel like emotional abandonment. And that is when they turn to sources like AI for emotional support.

I am not trying to shame the parents of the kids who lost their lives. But there is a critical question that everyone needs to ask: Why did these kids feel the need to become reliant on software for emotional support?

As humans, we tend to run away from our responsibilities often. We, as parents, are responsible for the overall well-being of our kids. If we take responsibility and put everything into that responsibility, our kids will be emotionally vulnerable in front of us, not in front of an AI.

If we are not spending enough time connecting with our kids to a level where they can pour their heart out in front of us, how can we expect the responsibility to lie on the shoulders of an algorithm that sits on remote servers?

We harp on Responsible AI so much, but the fact is that if we can be more responsible as humans, many of our worries regarding Responsible AI will be addressed.