Improvements in spatial awareness lead not just to better manipulation (pick and place) but also to more natural interactions with humans, more reliable behavior in dynamic scenes, and safer robotic operation overall. I came across an interesting paper in this domain: https://lnkd.in/gtpjEPiz

What is the improvement here?

Researchers created a new training dataset called RoboSpatial, comprising over 1 million real-world indoor and tabletop images, thousands of detailed 3D scans, and ~3 million labels encoding rich spatial information (e.g., object relations, distances, placements).

The dataset pairs 2D egocentric images with corresponding 3D scans of the same scene, enabling robot vision models to couple flat-image perception with 3D geometry awareness.

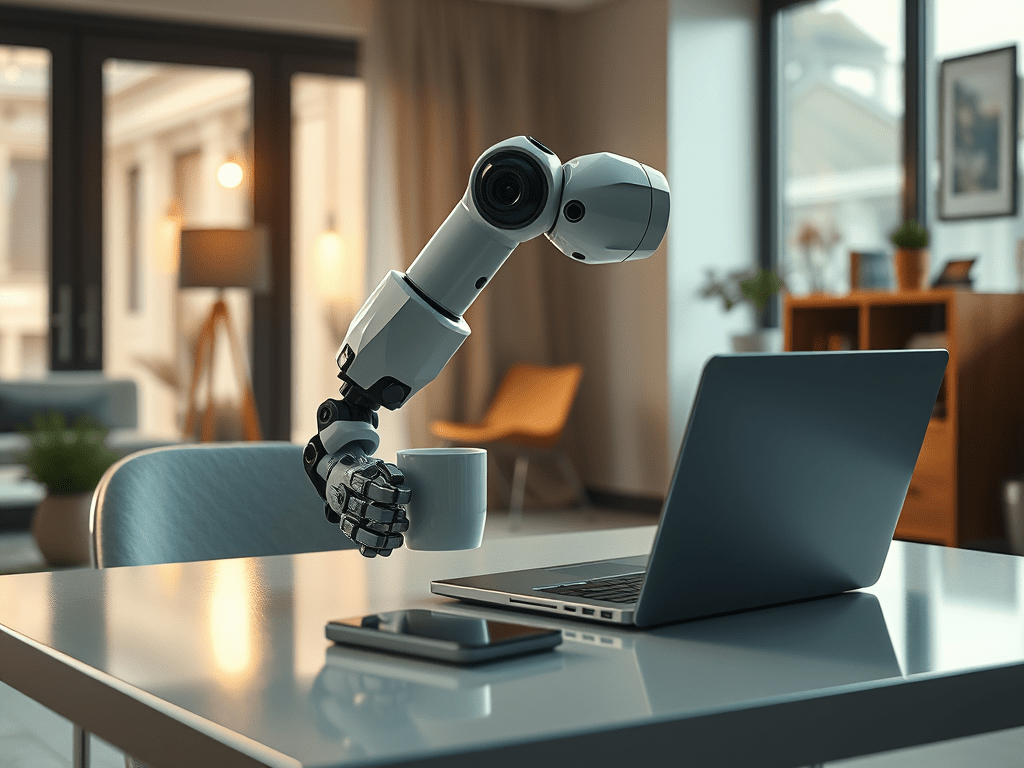

Robots using models trained on this dataset (one test used an assistive arm, the Kinova Jaco) showed improved spatial reasoning: they could more reliably answer questions like “is the mug to the left of the laptop?” or “can the chair be placed in front of the table?”
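To make the "left of" question concrete, here is a minimal Python sketch of how such a relation could be read off 3D object positions in the camera's (egocentric) frame. This is only an illustration, not the paper's method: the `Object3D` class, the axis convention (x to the right, z forward), the coordinates, and the 5 cm margin are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Object3D:
    """Hypothetical object record: centroid in the camera frame, in metres.

    Assumed axis convention: x points right, y points down, z points forward.
    """
    name: str
    x: float
    y: float
    z: float


def is_left_of(a: Object3D, b: Object3D, margin: float = 0.05) -> bool:
    """True if `a` is left of `b` from the camera's viewpoint by at least `margin`."""
    return a.x < b.x - margin


def is_in_front_of(a: Object3D, b: Object3D, margin: float = 0.05) -> bool:
    """True if `a` is closer to the camera than `b` by at least `margin`."""
    return a.z < b.z - margin


# Made-up positions for illustration only.
mug = Object3D("mug", x=-0.30, y=0.10, z=0.80)
laptop = Object3D("laptop", x=0.10, y=0.05, z=0.90)

print(is_left_of(mug, laptop))      # True
print(is_in_front_of(mug, laptop))  # True
```

The answer depends on the reference frame: the same two objects can swap "left" and "right" when viewed from the opposite side of the table, which is part of why frame-aware spatial labels are useful.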

Many robotic-vision systems excel at recognizing objects, but often lack spatial contextual reasoning (e.g., “where exactly is this object relative to me or other objects?”). This gap limits deployment in real-world dynamic environments.

By explicitly training on spatial labels and combining 2D/3D data, RoboSpatial helps build models that understand placement, relation, accessibility, and affordances (i.e., can this object be placed there, will it fit, is it accessible?).
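The "can it be placed there, will it fit?" question can also be sketched geometrically. The snippet below is a simplified illustration, assuming a top-down view in which the surface, the obstacles, and the object's footprint are all axis-aligned rectangles. The `Rect` class, the `can_place` helper, and the dimensions are hypothetical; a learned model would answer this from images rather than from explicit geometry.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle on the support surface, in metres (top-down view)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, other: "Rect") -> bool:
        """True if `other` lies entirely inside this rectangle."""
        return (self.x_min <= other.x_min and self.y_min <= other.y_min
                and other.x_max <= self.x_max and other.y_max <= self.y_max)

    def overlaps(self, other: "Rect") -> bool:
        """True if the two rectangles share interior area."""
        return (self.x_min < other.x_max and other.x_min < self.x_max
                and self.y_min < other.y_max and other.y_min < self.y_max)


def can_place(footprint: Rect, surface: Rect, obstacles: Iterable[Rect]) -> bool:
    """A footprint is placeable if it is on the surface and hits no obstacle."""
    return surface.contains(footprint) and not any(
        footprint.overlaps(o) for o in obstacles
    )


# Made-up scene: a 1.2 m x 0.6 m table with a laptop on it.
table = Rect(0.0, 0.0, 1.2, 0.6)
laptop = Rect(0.4, 0.1, 0.8, 0.4)

mug_free = Rect(0.1, 0.1, 0.2, 0.2)         # empty corner of the table
mug_blocked = Rect(0.7, 0.3, 0.8, 0.4)      # on top of the laptop's footprint
mug_off_table = Rect(1.15, 0.1, 1.25, 0.2)  # hangs over the table edge

print(can_place(mug_free, table, [laptop]))       # True
print(can_place(mug_blocked, table, [laptop]))    # False
print(can_place(mug_off_table, table, [laptop]))  # False
```

Accessibility (can the arm actually reach the spot?) would need an additional reachability check, which this sketch leaves out.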

The dataset becomes a foundation for general-purpose robotic perception, helping move beyond narrow tasks to more flexible, environment-aware automation.

Potential applications include assistive robotics (helping individuals with disabilities), home service robots, warehouse robots, human-robot collaboration, and any domain that requires fine spatial reasoning in unstructured spaces.

Improved Spatial Awareness In Robotics