A few months ago, I wrote an article, Federated Learning in Industry 4.0, on why federated learning can play a critical role in Industry 4.0. As you can imagine, I have been digging deeper into the topic over the last few weeks. Along the way, I came across a plethora of research papers focused specifically on applications of federated learning in smart manufacturing, such as the paper Federated Learning for Predictive Maintenance and Quality Inspection in Industrial Applications.

But why would you need federated learning for predictive maintenance, you ask? An example will help. Say you manufacture excavation equipment, the giant kind. A few years ago, some of your machines suffered early-lifecycle failures that should not have happened, and those failures cost your clients millions of dollars in lost revenue. Naturally, you want to embed an early detection system in your equipment so that customers get a proactive maintenance warning.

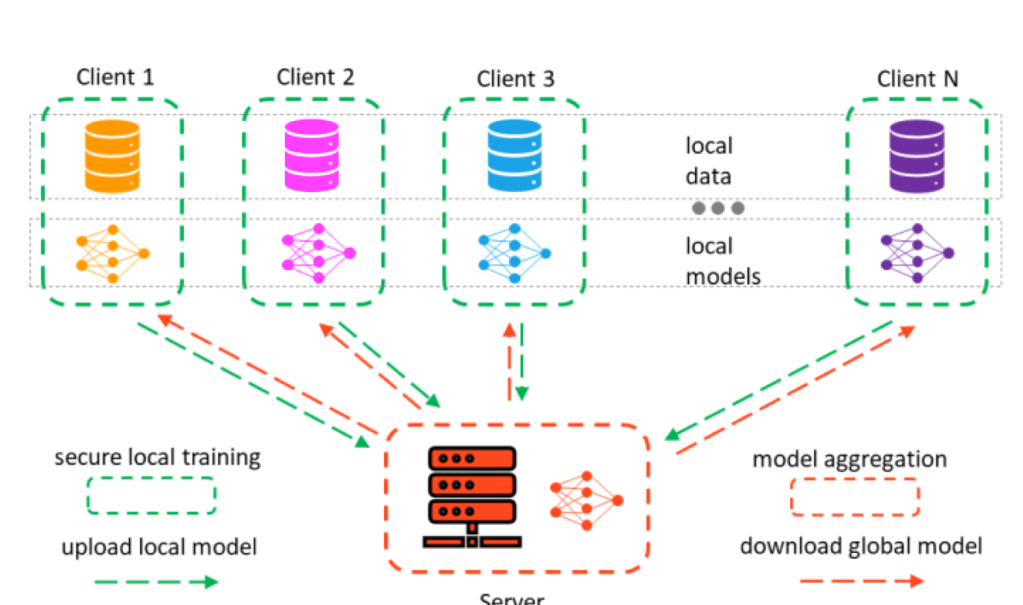

The challenge, though, is that you do not have direct access to the equipment's operating data, because your customers consider that data confidential. Yet you need it to build an early-failure warning system. You can collaborate with your customers to deploy client models on their premises that share only the necessary model parameters, not the raw data, with a central server hosting the global model. An example federated learning structure for this specific scenario is shown below:

Figure 1: Illustration of federated learning

Source: Federated Learning for Predictive Maintenance and Quality Inspection in Industrial Applications.
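To make the parameter-sharing idea in Figure 1 concrete, here is a minimal sketch of federated averaging (FedAvg), a common aggregation scheme, assuming each customer site trains a small logistic-regression failure classifier on its own sensor data. The features ("vibration", "temperature"), the synthetic data, and the helper functions are hypothetical, and the cited paper may well use a different model and aggregation scheme.

```python
import math
import random
from typing import List, Tuple

# One training example: (normalized sensor readings, 1 if a failure followed, else 0)
Sample = Tuple[List[float], int]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(weights: List[float], x: List[float]) -> float:
    # weights[0] is the bias; the remaining weights pair up with the features
    return sigmoid(weights[0] + sum(w * xi for w, xi in zip(weights[1:], x)))


def local_update(global_weights: List[float], data: List[Sample],
                 lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Runs on the customer's premises: SGD on local data, returns only weights."""
    w = list(global_weights)
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, x) - y  # gradient of the log-loss w.r.t. the logit
            w[0] -= lr * err
            for i, xi in enumerate(x):
                w[i + 1] -= lr * err * xi
    return w


def federated_average(updates: List[Tuple[List[float], int]]) -> List[float]:
    """Runs on the manufacturer's server: sample-weighted mean of client weights."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


def train_federated(clients: List[List[Sample]], n_features: int,
                    rounds: int = 20) -> List[float]:
    global_w = [0.0] * (n_features + 1)
    for _ in range(rounds):
        # Each client trains locally; raw sensor data never leaves the client
        updates = [(local_update(global_w, data), len(data)) for data in clients]
        global_w = federated_average(updates)
    return global_w


def make_client_data(seed: int, n: int = 50) -> List[Sample]:
    # Hypothetical stand-in for one customer's data: failure when vibration + temperature > 1
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        vibration, temperature = rng.random(), rng.random()
        data.append(([vibration, temperature], int(vibration + temperature > 1.0)))
    return data


if __name__ == "__main__":
    clients = [make_client_data(seed) for seed in (1, 2, 3)]
    model = train_federated(clients, n_features=2)
    print(predict(model, [0.95, 0.9]), predict(model, [0.05, 0.1]))
```

Only the weight vectors cross the boundary between each customer and the manufacturer. Weighting by sample count keeps a site with little data from pulling the global model as hard as a large fleet does. Real deployments often layer secure aggregation or differential privacy on top, which this sketch omits.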

Bullwhip and CPFR

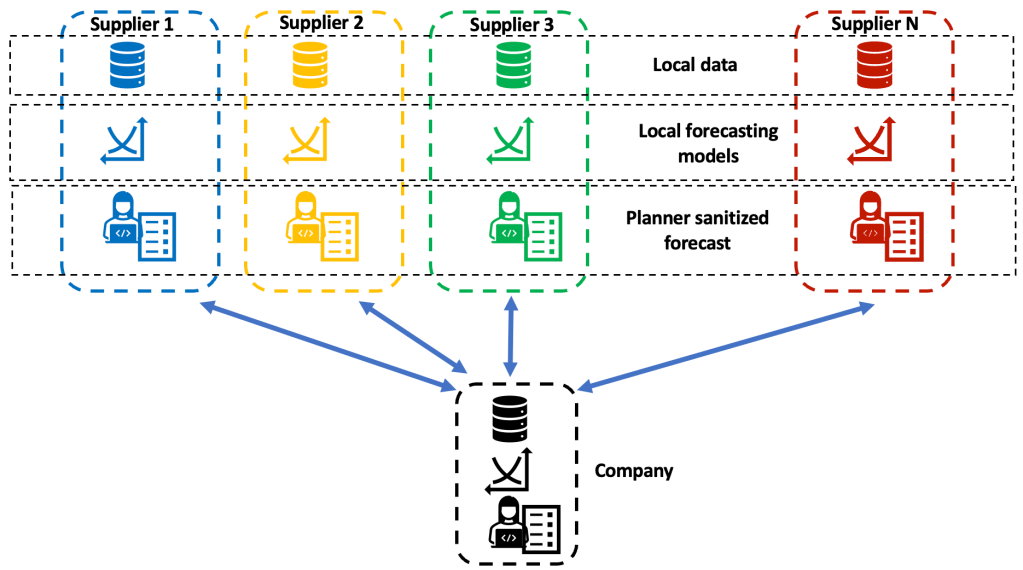

I took a few minutes' break from my coding session to relish my morning tea. As I did, I looked at the illustration in Figure 1, and that is when it hit me! Isn't this similar to the architecture we already use for CPFR, albeit with limited success? Look at Figure 2, which captures a typical CPFR arrangement.

Figure 2: A typical CPFR arrangement

Even from the high-level overview in Figure 2, you can see the architectural similarities, and hence a potential use case.

For those unfamiliar with CPFR, the short, candid version is this: Collaborative Planning, Forecasting, and Replenishment (CPFR) is an approach used predominantly in the consumer goods industry, intended to provide shared forecasts across a supply chain so that problems like the bullwhip effect (small fluctuations in consumer demand amplifying into ever larger swings in orders further upstream) can be minimized. I say “intended to” because CPFR never really took off, though few may admit it openly.
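The bullwhip effect is easy to reproduce in a toy model. The sketch below is a textbook-style simulation, not something taken from the CPFR literature: each tier follows an order-up-to policy with a moving-average forecast, and the demand distribution, lead time, and forecast window are arbitrary assumptions. It compares tiers that forecast from the orders they receive with tiers that forecast from shared end-consumer demand, which is roughly the kind of visibility CPFR aims to provide.

```python
import random
import statistics
from typing import List


def next_tier_orders(incoming: List[float], forecast_signal: List[float],
                     p: int = 4, lead_time: int = 2) -> List[float]:
    """Orders a tier places upstream under an order-up-to policy.

    Target stock S_t = (lead_time + 1) * F_t, where F_t is the p-period moving
    average of forecast_signal; order_t = incoming_t + S_t - S_{t-1}.
    Negative orders (returns) are allowed to keep the model simple.
    """
    orders = list(incoming)  # warm-up periods: pass orders straight through
    for t in range(p, len(incoming)):
        f_now = sum(forecast_signal[t - p + 1:t + 1]) / p
        f_prev = sum(forecast_signal[t - p:t]) / p
        orders[t] = incoming[t] + (lead_time + 1) * (f_now - f_prev)
    return orders


def amplification(n_tiers: int, shared: bool, periods: int = 5000,
                  seed: int = 7) -> List[float]:
    """Order variance at each tier divided by end-consumer demand variance.

    shared=False: each tier forecasts from the orders it receives.
    shared=True:  each tier forecasts from end-consumer demand (CPFR-style).
    """
    rng = random.Random(seed)
    demand = [rng.gauss(100, 10) for _ in range(periods)]
    warmup = 50  # drop start-up periods before measuring variance
    base_var = statistics.pvariance(demand[warmup:])
    incoming, ratios = demand, []
    for _ in range(n_tiers):
        signal = demand if shared else incoming
        incoming = next_tier_orders(incoming, signal)
        ratios.append(statistics.pvariance(incoming[warmup:]) / base_var)
    return ratios


if __name__ == "__main__":
    print("own orders   :", [round(r, 1) for r in amplification(3, shared=False)])
    print("shared demand:", [round(r, 1) for r in amplification(3, shared=True)])
```

In this model, sharing end-consumer demand does not eliminate amplification, but the variance ratio grows far more slowly from tier to tier than when each tier forecasts only from the orders it sees.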

CPFR's lackluster performance was caused not by technology but by human behavior; more specifically, by trust and prioritization. Most companies do not trust sharing a granular forecast with an external entity. They can (and they do) sanitize it. A forecast can be sanitized in a way that keeps it useful for CPFR, but that takes effort, and planners and supply chain professionals will always prioritize tasks that directly impact their day-to-day work. The result is that the data shared in the CPFR process is mostly crap. And this is where the federated architecture can help.

Federated learning for CPFR

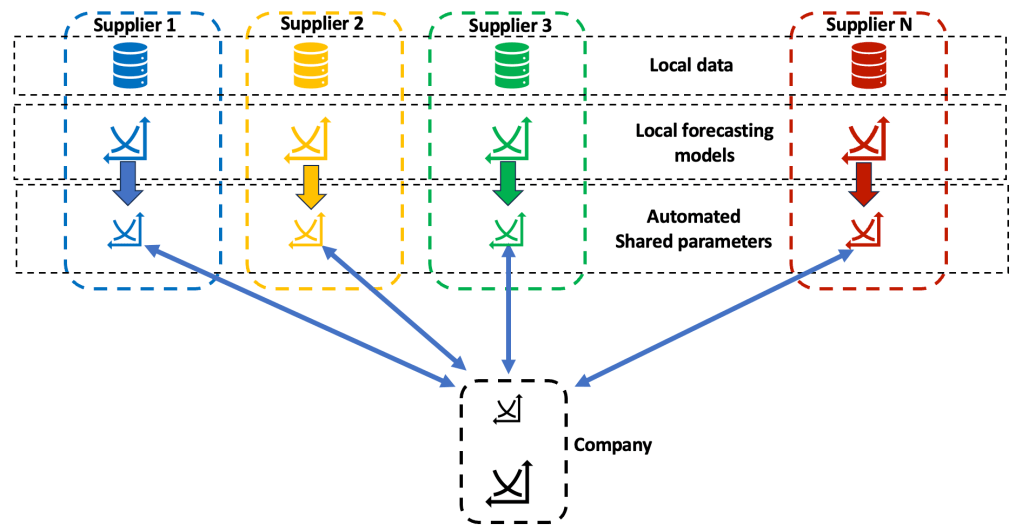

While federated learning is most often discussed in the context of deep learning, the name refers to training across a federation of decentralized clients rather than to any particular model type, so the underlying concept extends to scenarios where deep learning is not used at all. Take a look at Figure 3, for example.

Figure 3: Extrapolating federated architecture to CPFR

The architecture above does not assume any deep learning models. However, it borrows the core concept of federated learning: local models share specific data or parameters with a central entity, in this case the consumer goods company. But there is one critical caveat: trust remains an issue.
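As an illustration of what a supplier-side "local model" might look like without any deep learning, the sketch below reduces a granular forecast (SKU by plant by week) to a small set of shareable parameters: weekly totals per product family, rounded to coarse buckets, with any family-week backed by too few SKUs suppressed so that a single product's forecast cannot be read off. The record fields, the rounding bucket, and the `min_skus` threshold are hypothetical choices, not part of the architecture in Figure 3.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class ForecastLine:
    # Hypothetical shape of one line in the supplier's full, confidential forecast
    sku: str
    family: str
    plant: str
    week: int
    units: float


def extract_shareable_parameters(lines: Iterable[ForecastLine], rounding: int = 100,
                                 min_skus: int = 2) -> Dict[Tuple[str, int], int]:
    """Aggregate to (family, week) totals; plant and SKU detail never leave."""
    totals: Dict[Tuple[str, int], float] = defaultdict(float)
    skus: Dict[Tuple[str, int], set] = defaultdict(set)
    for line in lines:
        key = (line.family, line.week)
        totals[key] += line.units
        skus[key].add(line.sku)

    shared = {}
    for key, total in totals.items():
        if len(skus[key]) < min_skus:
            continue  # too few SKUs: the total would expose one product's forecast
        shared[key] = int(round(total / rounding) * rounding)  # coarse bucket
    return shared


if __name__ == "__main__":
    forecast = [
        ForecastLine("A1", "pumps", "P1", 1, 430),
        ForecastLine("A2", "pumps", "P2", 1, 260),
        ForecastLine("B1", "valves", "P1", 1, 120),
        ForecastLine("A1", "pumps", "P1", 2, 510),
        ForecastLine("A2", "pumps", "P2", 2, 360),
    ]
    print(extract_shareable_parameters(forecast))
```

Because the extraction is a plain, deterministic function, a supplier can read exactly which aggregation and suppression rules are applied before anything is shared.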

Automating parameter sharing takes the human planner out of the loop. However, the model itself, specifically the one that extracts the parameters to share from the full forecast, still has to earn trust before it can be rolled out at key suppliers. Those suppliers will need to know exactly what data or parameters these models send to the company's central server. They need complete visibility into the architecture so that they can trust that nothing they do not want to share, or are not permitted to share, is being sent to the company.
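One way to give suppliers that visibility is to make the outbound boundary explicit and inspectable: a short, human-readable data contract listing every field allowed to leave the supplier, a check that rejects anything else before it is sent, and a local log of every payload. The sketch below assumes such a contract; the field names, `ALLOWED_FIELDS`, and the helper functions are hypothetical, and transport to the company's server is deliberately left out.

```python
import json
from typing import Any, Dict, List

# Hypothetical data contract, published to and reviewable by the supplier:
# the only fields (and types) that may ever leave the supplier's systems.
ALLOWED_FIELDS: Dict[str, type] = {"family": str, "week": int, "units": int}


class DisallowedPayloadError(ValueError):
    """Raised when an outbound record does not match the agreed contract."""


def validate_outbound(records: List[Dict[str, Any]]) -> None:
    for i, record in enumerate(records):
        extra = set(record) - set(ALLOWED_FIELDS)
        missing = set(ALLOWED_FIELDS) - set(record)
        if extra:
            raise DisallowedPayloadError(f"record {i}: fields outside contract: {sorted(extra)}")
        if missing:
            raise DisallowedPayloadError(f"record {i}: missing fields: {sorted(missing)}")
        for field, expected in ALLOWED_FIELDS.items():
            # Exact type check, so that e.g. True is not accepted as an int
            if type(record[field]) is not expected:
                raise DisallowedPayloadError(f"record {i}: {field!r} must be {expected.__name__}")


def prepare_outbound(records: List[Dict[str, Any]], audit_log: List[str]) -> str:
    """Validate, serialize, and log exactly what would be sent to the company."""
    validate_outbound(records)
    payload = json.dumps(records, sort_keys=True)
    audit_log.append(payload)  # the supplier keeps a verbatim copy of every transmission
    return payload  # sending it to the central server is out of scope here
```

Because the contract is a few lines of configuration rather than logic buried inside the model, a supplier's IT or legal team can review it directly, and the audit log lets them verify after the fact that nothing else was sent.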

Many details still need to be worked out to fine-tune this. But the idea is that an approach like this can help companies use real, high-quality data for collaborative planning, establish genuine visibility across the supply chain, and significantly mitigate the bullwhip effect.