Deep reinforcement learning (DRL) is an artificial intelligence approach to developing programs that can solve problems that require intelligence. Intelligence is something that we are obsessed with replicating outside our brains. In fact, we associate the term artificial intelligence with this capability, and strive to attain Artificial General Intelligence (AGI) at some point. While DRL on its own might still not be what we can call true AGI, it does mimic some form of intelligence: specifically, the decision-making intelligence used by many species.

The distinguishing property of DRL programs is that they learn through trial and error from feedback that is sequential, evaluative, and sampled, using nonlinear function approximation. If all of this seems too technical at this point, don’t panic! The purpose of this article series is to demystify deep reinforcement learning, one of the most powerful approaches in artificial intelligence. It leverages deep learning and, combined with other approaches, can help humans plan businesses, specifically operational and tactical planning, with the aim of zero defects.

The goal of DRL is to build intelligent machines that learn through feedback. Remember that this is how the most intelligent species on the planet learn as well. In fact, even species or life forms that are not so intelligent learn through feedback. Feedback, in some form, is what propels evolution: according to the theory of evolution, organisms change in response to feedback from the environment and ecosystem in which they live. While biological innovation is slow, extremely slow in many cases, the gist is that feedback is tied to the evolution of intelligence. Feedback is the crux of DRL as well.

In this article series, we will cover six key traits of deep reinforcement learning that will allow you to understand what this technology is and the areas in which it can be leveraged. Specifically, we will cover the following:

- The reinforcement learning cycle

- Trial and error learning

- Sequential feedback

- Exploration versus exploitation

- Sampled feedback

- Nonlinear function approximation
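
To make the first few of these traits concrete before the series covers them one by one, here is a minimal sketch of a learning loop. It is a toy illustration under stated assumptions, not a DRL implementation: the `Corridor` environment, the `train` function, and all parameter values are hypothetical, and a plain lookup table stands in for the neural network, so the last trait in the list is deliberately left out. The update rule used is tabular Q-learning, chosen here only because it is short.

```python
import random


class Corridor:
    """Hypothetical toy environment: cells 0..4, start in the middle.

    Reaching the right end gives reward 1, reaching the left end gives 0.
    """

    def __init__(self, length=5):
        self.length = length
        self.state = length // 2

    def reset(self):
        self.state = self.length // 2
        return self.state

    def step(self, action):
        # Action 0 moves left, action 1 moves right.
        self.state += 1 if action == 1 else -1
        done = self.state in (0, self.length - 1)
        reward = 1.0 if self.state == self.length - 1 else 0.0
        return self.state, reward, done


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Learn action values by trial and error (tabular Q-learning)."""
    rng = random.Random(seed)
    env = Corridor()
    # One row per state, one value per action. A table, not a neural network.
    q = [[0.0, 0.0] for _ in range(env.length)]

    for _ in range(episodes):
        state = env.reset()
        done = False
        while not done:
            # Exploration versus exploitation: act randomly with probability
            # epsilon (or when the estimates are tied), otherwise act greedily.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            # Sampled, evaluative feedback: the agent only sees the reward
            # for the action it actually tried.
            next_state, reward, done = env.step(action)

            # Sequential feedback: the value of this step depends on what
            # can still be earned from the next state.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


if __name__ == "__main__":
    for cell, (left, right) in enumerate(train()):
        print(f"cell {cell}: left={left:.2f} right={right:.2f}")
```

After training, the estimated value of moving right from the starting cell should exceed that of moving left, even though the agent was never told which direction is correct. It only ever received a number after each attempt.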

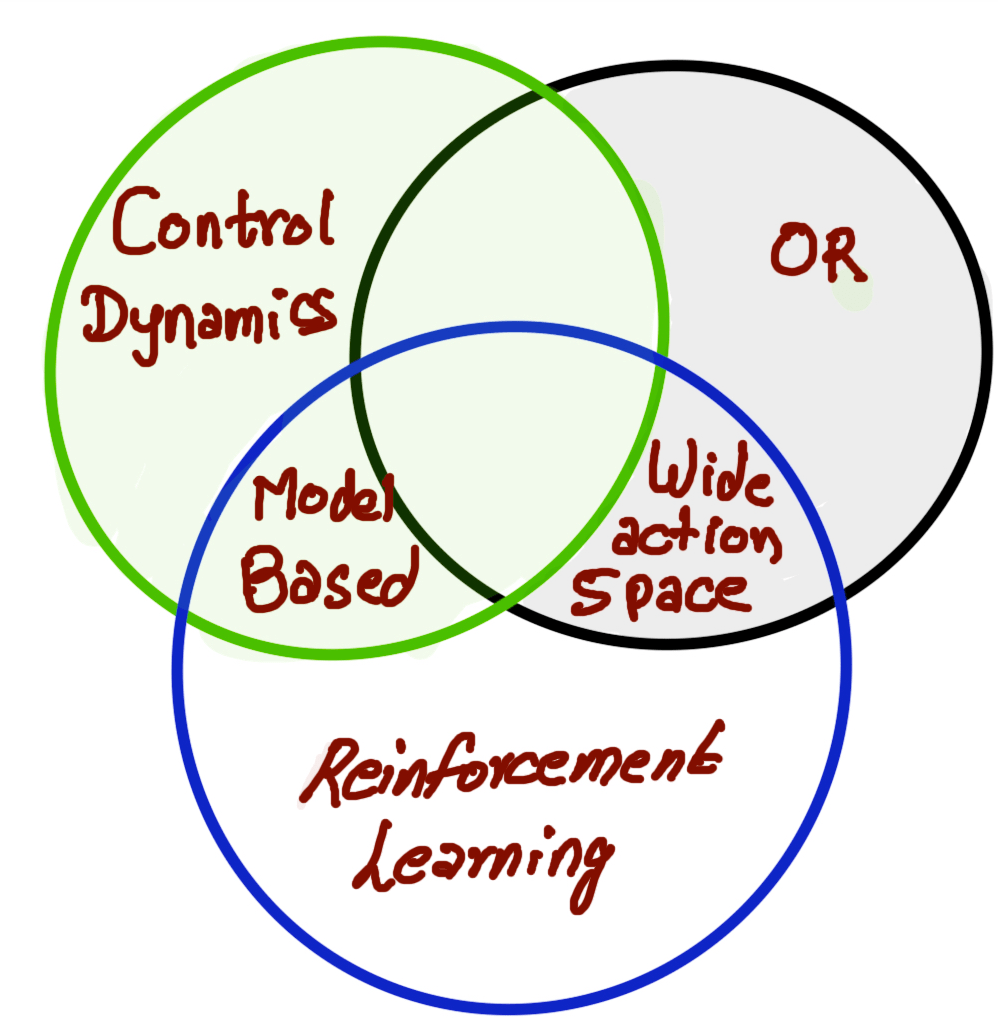

Deep reinforcement learning is actually a combination, or intersection, of many different subjects. Remember that behind all the jargon, DRL is an approach for solving complex sequential decision-making problems, and it takes its inspiration from many different fields.

If you studied electrical or electronics engineering like me, chances are that you took courses in control theory. Remember that in control theory the system dynamics are generally known in advance. This is why these courses are often titled control dynamics.

Operations research, on the other hand, also studies decision making, but under uncertainty, and the problems this field solves often have much larger action spaces than those we encounter in DRL. Let’s switch gears to a non-technical subject: psychology. Psychology studies human behavior, which also involves complex sequential decision making under uncertainty.

As you can see from the illustration above, deep reinforcement learning takes inspiration from many fields that may seem starkly different but that all, in some sense, address the challenge of decision making. While this synergy is powerful, it also introduces inconsistencies in terminology and notation. Though this article series will focus mostly on the technical side of DRL, keep in mind that we can always draw on examples from these different fields to understand the technical aspects.

In the second part of this article series, we will start discussing fundamental concepts of deep reinforcement learning such as agents, environments, states, and observations. The second part will be published on July 25th.