A story in the WSJ today posed a question: “If AI has transformative powers, why is it not able to solve problems like world hunger or war?” You can read the story using the link below. I don’t think you need a subscription for this specific article.

I think AI can definitely help reduce hunger and the probability of war. But it cannot solve a problem that cannot be formulated as a mathematical problem in the backend. For that matter, it cannot solve any problem, whether socioeconomic or in business processes, where the underlying cause lies beyond the realm of pure math, that is, where the underlying issues are driven by bad processes and undesirable human behavior.

This is also a good reminder that we probably need to reset our expectations of AI. AI can provide valuable tools and, in many cases, tackle a problem on its own, but only if the problem can be translated into pure math in the backend. An example is facial recognition. AI can nail that (mostly).

But if you want AI to predict what a person in a live image, with a neutral facial expression, will do next, it will struggle. It can definitely spit out some response, but that response will in no way be reliable unless there are other indicators in the image that the algorithm can leverage. The gist here is that a prediction of this nature goes beyond pure math.

Let me be blunt here. Let us assume that a perishable goods company needs to tackle its spoilage problem. Currently 95% of its products perish for a few different reasons. A deep learning algorithm can be designed to analyze months or years of data to understand the key drivers. But take my word for it: even after most of those drivers are tackled, there will never be zero spoilage. The AI algorithm will never be able to stop the spoilage completely. For example, AI can’t force pickers and putaway labor to handle the perishables correctly in warehouses, and mishandling may lead to damage that causes the goods to perish early.

Hence, when it comes to issues like world hunger, AI can reduce the problem but can’t stop it. You may have read that ChatGPT recently started spitting out gibberish (though temporarily). It couldn’t even stop itself from acting weird. And if you understand that it is essentially a mathematical model in the backend, you will be forgiving of such weird temporary failures.

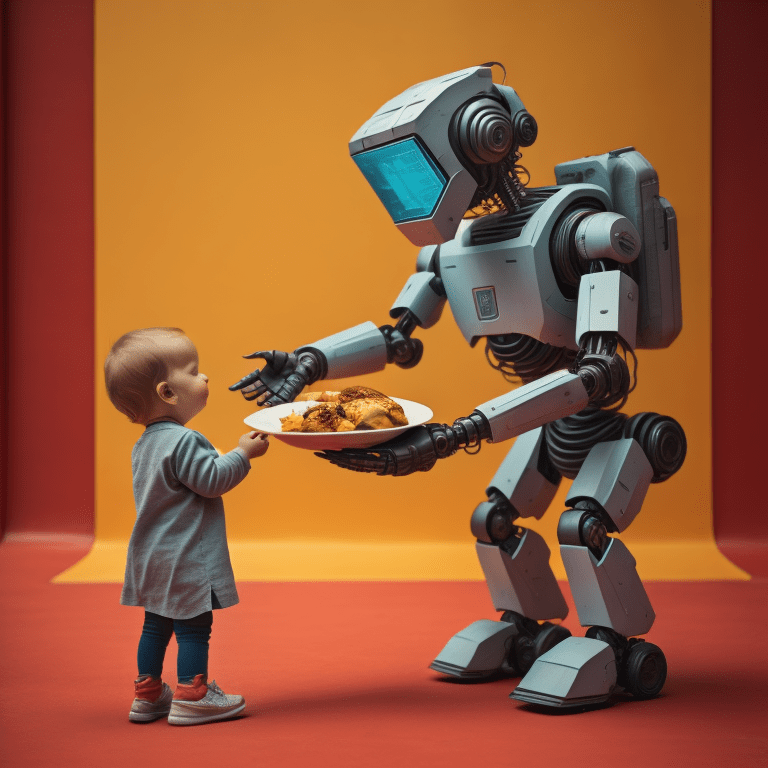

An algorithm that cannot ensure its own consistent perfection certainly cannot stop world hunger. Despite having the word “Intelligence” in their name, AI algorithms currently do not have their own intelligence, and it is up to humans to leverage tools like AI for noble causes.

But can we put AI to use to at least minimize wars and reduce world hunger? We can. For example, we can leverage AI to find the drivers behind hunger problems in geographies around the world. But none of those insights are unknown to humans. Similar reasoning applies to wars. AI cannot change the mind of that one single dictator who does not listen to sane reasoning. AI can provide reasoning and negotiation strategies, but a sensible leader is needed to comprehend them.

This same mentality of “AI Magic” is somehow percolating into the corporate world, where we believe AI can do magic on problems that have consistently plagued us. In many of these areas, some form of solution that could have tackled the issue existed before the current AI frenzy (though AI solutions may do a better job). But no solution works by itself. Many problems persist because of bad processes and undesirable human behavior. Technology alone can do nothing.

Unless, of course, a Neuralink implant starts tweaking the way we think. 😁