This article is the second part of a series of three articles. The first part can be read here.

There is no doubt that the cloud approach to building AI capabilities was a transformative leap from decades of legacy approaches that constrained organizations from innovating and experimenting. However, the Cloud AI approach, as discussed in the first part of this series, did have some drawbacks. These drawbacks become much more prominent in supply chains, where several nodes generate and send data to a central, cloud-based hub. In addition to the challenges highlighted in the last article, scaling is also a challenge. As you build more advanced algorithms, having one central algorithm do all the number crunching becomes less practical. This is where Edge AI came into the picture to address many of these drawbacks.

The advantage of the Edge AI model is that you move decision-making to the edge, close to where the action is, on the floor, by leveraging a distributed cloud architecture. The third and final part of this series will focus on the next stage of evolution, Distributed AI. However, Edge AI can already help build capabilities beyond what many organizations currently have, and most organizations have barely scratched the surface with it.

The Edge AI Approach

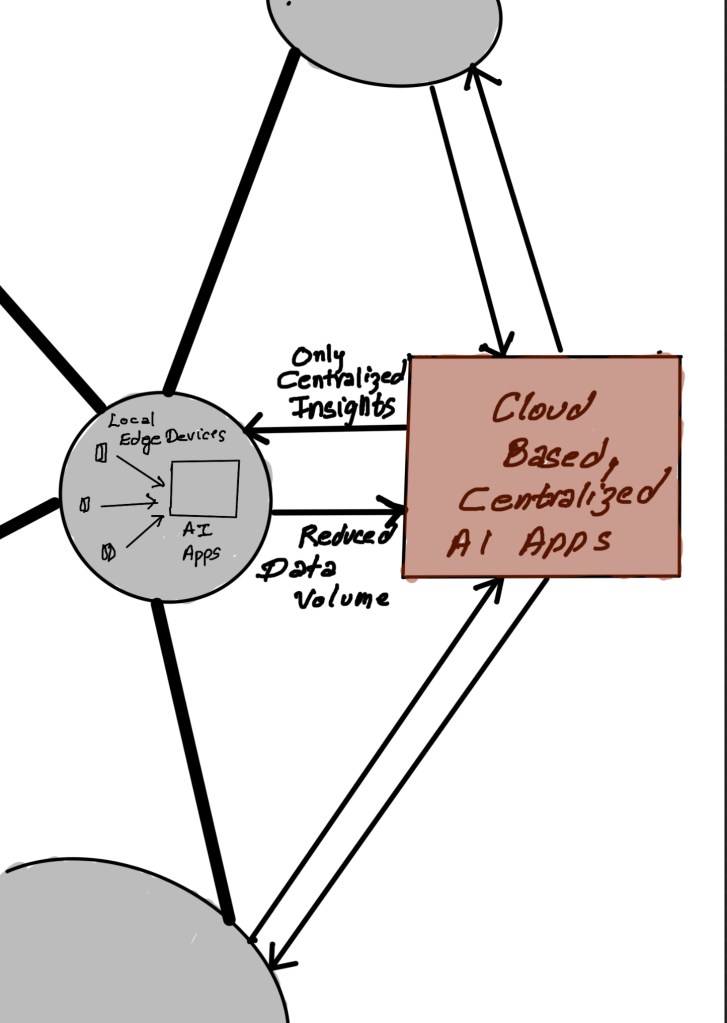

Unlike the Cloud AI approach (refer to Figure 1 in the first part of this series), the volume of data and decisions exchanged with the central hub is smaller. A simple representation of Edge AI in a supply chain context is shown in Figure 2. Note that this article looks at these frameworks from an analytics perspective; the same architectures are also leveraged for application management and deployment.

Figure 2: A Simple Representation of Edge AI Flow

As you can assume, the intent is to push decisions to the edge. This is done by generating insights at the edge. So, essentially, this approach minimizes latency in data capture, insight generation, and decision-making. The efficiency gain, as you can imagine, is that the volume of data shared with the cloud hub is reduced significantly, because a significant share of it is now consumed at the edge itself.
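To make the data-volume point concrete, here is a minimal sketch in Python. It assumes a hypothetical edge node that consumes raw sensor readings locally and forwards only a compact summary to the cloud hub. The function name `summarize_at_edge`, the threshold, and the summary fields are illustrative assumptions, not part of any specific platform.

```python
from statistics import mean


def summarize_at_edge(readings, threshold):
    """Consume raw readings locally and return only a compact summary.

    Hypothetical helper: the fields below are illustrative assumptions.
    """
    # The local decision (which readings need attention) is made at the edge.
    alerts = [r for r in readings if r > threshold]
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "alerts": len(alerts),
    }


# Five raw readings stay at the edge; one small record goes to the hub.
readings = [20.0, 21.0, 19.0, 35.0, 20.0]
summary = summarize_at_edge(readings, threshold=30.0)
print(summary)
```

In this sketch, the hub receives one small record per batch instead of every raw reading, which is the reduction in exchanged data described above.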

This framework allows us to capture and use insights in a way we have not done before. Edge AI forms the foundational building block of Industry 4.0 capabilities, where TinyML can help process data on the edge (on manufacturing floors, for example) and generate almost immediate insights (like flagging anomalies). However, the advantages extend beyond manufacturing and are also being leveraged in warehousing and logistics. There are also opportunities in retail shop floor management and in interpreting consumer behavior on the floor. This framework is genuinely transformative.
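As an illustration of flagging anomalies at the edge, the sketch below uses a simple rolling z-score over recent readings. This is a stand-in technique chosen for brevity, not a TinyML model, and the class name `EdgeAnomalyDetector`, the window size, and the threshold are all hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


class EdgeAnomalyDetector:
    """Rolling z-score detector (illustrative; not an actual TinyML model)."""

    def __init__(self, window=5, z_threshold=3.0):
        self.window = deque(maxlen=window)  # recent normal readings
        self.z_threshold = z_threshold

    def observe(self, value):
        """Return True if value deviates strongly from the recent window."""
        is_anomaly = False
        # Only score once the window holds enough history.
        if len(self.window) == self.window.maxlen:
            mu = mean(self.window)
            sigma = pstdev(self.window)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        # Keep anomalies out of the baseline so they do not distort it.
        if not is_anomaly:
            self.window.append(value)
        return is_anomaly


detector = EdgeAnomalyDetector(window=5, z_threshold=3.0)
readings = [10.0, 10.2, 9.8, 10.1, 9.9, 25.0, 10.0]
flags = [detector.observe(r) for r in readings]
print(flags)
```

Because the check runs where the data is generated, the flag is available immediately on the floor, and only the flagged events would need to travel to the cloud hub.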

So far, Edge AI seems to be a perfect solution. If it is, why bother with Distributed AI? One thing to keep in mind, as shown in Figure 2, is that the edge in this framework is essentially a node in the supply chain: a decision-making entity such as a plant or a warehouse.

To be a little more technical and borrow terms from distributed cloud terminology, each edge, or node, has a container platform with data and AI middleware deployed on it, and applications are deployed at the node itself. Hence, it is not just about sensors capturing and transmitting data or TinyML deployed on smart edge devices. In the context of this framework, the node itself is the edge. Within this edge location, you also have smaller edges, like smart sensors and cameras.

This seems like a perfect solution: it addresses latency and gives the nodes control over analyzing and executing decisions, as well as technical control of the applications on their stack. So, what drawbacks might lead us to explore Distributed AI? The third and final part of this series will cover those drawbacks and how Distributed AI can help. It will be published on 11/16.