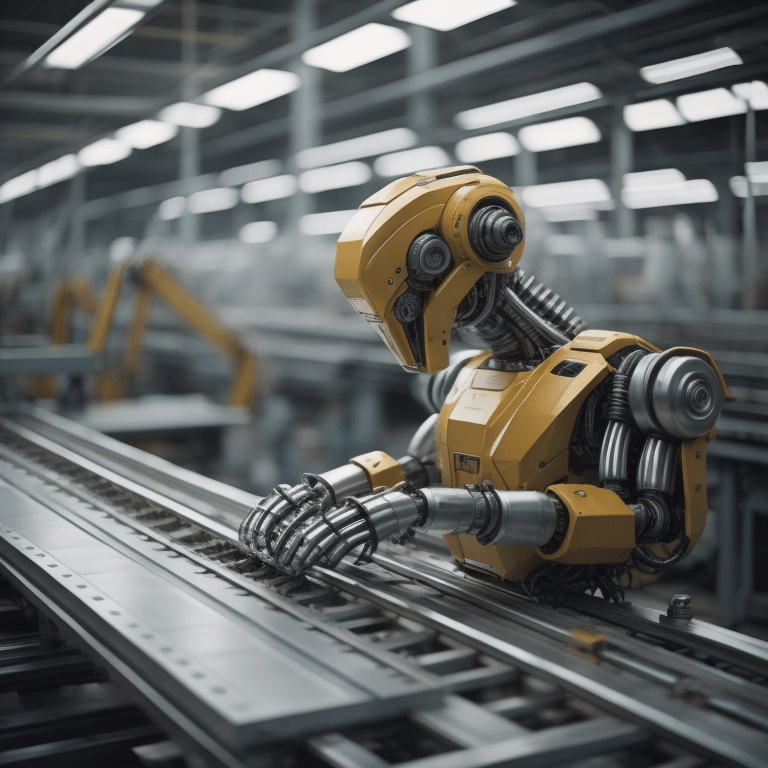

In their research paper, Real-Time Information-Driven Production Scheduling System, Zhang and Tao propose a real-time production-driven information system architecture, shown in Figure 1.

Figure 1: Overall architecture of a real-time production-driven information system

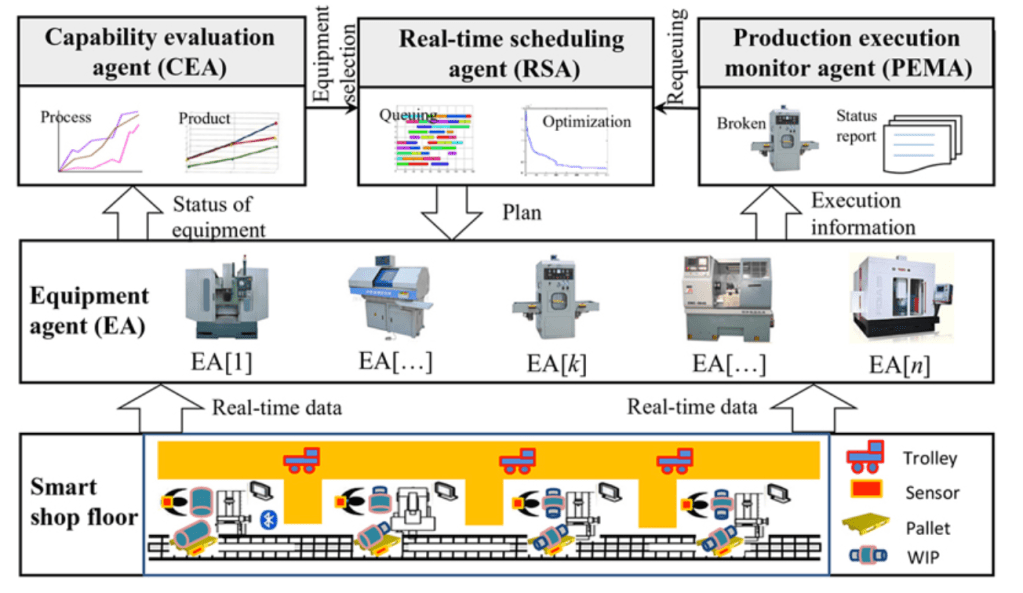

As the architecture above shows, the top layer, which focuses on planning and execution, comprises a capability evaluation agent, a real-time scheduling agent (RSA), and a production-execution monitor agent. The most critical component of the architecture is the RSA, which is tasked with planning. The paper's proposed architecture for this component is reproduced in Figure 2.

Figure 2: Components of real-time scheduling agent.

While the overall architecture proposed in Figure 1 can be improved in multiple ways, the architecture of the real-time scheduling agent in Figure 2 has certain real-world drawbacks. The primary one is its reliance on an optimization approach, which can make real-time execution impractical because solving the optimization problem can take too long. Neural network-based models can alleviate this challenge.

Most manufacturing operations try to minimize setup time by sequencing parts together with other parts that share common geometry and tool requirements. This form of sequencing is inflexible: although it reduces setup time, it does not respond well to fluctuations in customer demand patterns. While many attempts have been made to minimize the cost or cycle time of job shop manufacturing, attempts that address setup waste while also meeting customer on-time delivery are rare.

Job Shop Scheduling (JSS) Approach

JSS approaches are widely used for shop scheduling, notably the Shortest Processing Time (SPT) heuristic.

SPT Heuristic: Let P1 denote the part number with the lowest total setup time plus machining time, P2 the part with the second lowest, and so on. Then run the parts in the sequence P1, P2, …, Pn, so that t(P1) ≤ t(P2) ≤ … ≤ t(Pn), where t is the total setup plus machining time. For a single machine with sequence-independent times, this results in the lowest average, or mean, delivery cycle time.

This heuristic does not address the customer service issue either. While it may result in a lower mean cycle time, it may also result in part Pn being produced last even though the customer needs it first. However, you can run variations of SPT with additional constraints, such as due dates, and compare the results of several JSS formulations.
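A minimal sketch of the Shortest Processing Time rule and its customer-service blind spot, assuming a single machine and sequence-independent setup times. The part names, hours, and due times are hypothetical, and the Earliest Due Date (EDD) rule is swapped in as one example of a due-date-driven variant; the source does not name a specific variant.

```python
from dataclasses import dataclass

@dataclass
class Part:
    name: str
    setup_hours: float      # assumed sequence-independent for the SPT rule
    machining_hours: float
    due_hours: float        # hypothetical due time, in hours from now

def spt_sequence(parts):
    """Shortest Processing Time: sort by setup + machining time, shortest first."""
    return sorted(parts, key=lambda p: p.setup_hours + p.machining_hours)

def edd_sequence(parts):
    """Earliest Due Date: one due-date-driven alternative (not from the source)."""
    return sorted(parts, key=lambda p: p.due_hours)

def evaluate(sequence):
    """Run parts back to back on one machine; return (mean completion time, late parts)."""
    clock, completions, late = 0.0, [], []
    for p in sequence:
        clock += p.setup_hours + p.machining_hours
        completions.append(clock)
        if clock > p.due_hours:
            late.append(p.name)
    return sum(completions) / len(completions), late

parts = [
    Part("P_a", 1.0, 2.0, 20.0),
    Part("P_b", 0.5, 1.0, 20.0),
    Part("P_c", 2.0, 4.0, 6.0),  # longest job, but the customer needs it first
    Part("P_d", 1.0, 1.0, 20.0),
]

print(evaluate(spt_sequence(parts)))  # (6.0, ['P_c'])
print(evaluate(edd_sequence(parts)))  # (9.5, [])
```

SPT gives the lower mean completion time, but the part the customer needs first ships late; EDD meets every due time at the cost of a higher mean.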

The Neural Network Approach

Problem Statement

We need to find a production sequence that replaces wasteful, random setups and reduces setup time by 50-70% while still meeting customer delivery dates.
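One way to write this problem down, assuming a single machine: let π be a sequence of the n parts, π(0) the part the machine is currently set up for, s_ij the setup time from part i to part j, p_j the machining time of part j, C_j its completion time, and d_j its due date. This notation is introduced here for illustration and does not come from the source.

```latex
\min_{\pi} \sum_{k=1}^{n} s_{\pi(k-1),\,\pi(k)}
\quad \text{subject to} \quad
C_{\pi(k)} = \sum_{m=1}^{k} \left( s_{\pi(m-1),\,\pi(m)} + p_{\pi(m)} \right) \le d_{\pi(k)},
\qquad k = 1, \dots, n
```

In these terms, a 50-70% reduction means the chosen sequence's total setup time is 0.3 to 0.5 times that of the current sequencing.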

Inputs

- A list of all jobs and part numbers in each pull group, all of which must be shipped within 30 days.

- A matrix of setup times from any part number to any other part number.

- Setup data for the tools, chucks, and other fixtures currently on each machine, available within 3 hours of the machine finishing its current machining task. This 3-hour window allows the schedule to respond to any new job.
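A minimal sketch of how these inputs might be held in code and sanity-checked before scheduling. The `Job` fields, job and pull-group IDs, and setup hours are hypothetical; a real system would load them from the ERP and machine data.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Job:
    job_id: str
    part_number: str
    pull_group: str
    due_day: int  # days from today; jobs must ship within the 30-day horizon

# Hypothetical setup hours: SETUP_HOURS[from_part][to_part].
SETUP_HOURS = {
    "A": {"B": 1.5, "C": 3.0, "D": 2.0},
    "B": {"A": 1.0, "C": 2.5, "D": 0.5},
    "C": {"A": 3.0, "B": 2.0, "D": 1.0},
    "D": {"A": 2.5, "B": 0.5, "C": 1.5},
}

def validate_inputs(jobs, setup_hours, horizon_days=30):
    """Return a sorted list of problems in the scheduling inputs (empty if none)."""
    problems = []
    for j in jobs:
        if not 0 <= j.due_day <= horizon_days:
            problems.append(f"{j.job_id}: due day {j.due_day} outside {horizon_days}-day horizon")
    parts = {j.part_number for j in jobs}
    for a in parts:
        for b in parts:
            # Every ordered pair of distinct parts needs a setup time.
            if a != b and b not in setup_hours.get(a, {}):
                problems.append(f"missing setup time {a} -> {b}")
    return sorted(problems)

jobs = [
    Job("J1", "A", "PG1", 5),
    Job("J2", "B", "PG1", 12),
    Job("J3", "C", "PG2", 30),
    Job("J4", "E", "PG2", 35),  # unknown part, beyond the horizon
]
for problem in validate_inputs(jobs, SETUP_HOURS):
    print(problem)
```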

Creating Training Data and Why Heuristics Won’t Work

In theory, this problem is similar to the traveling salesman problem (TSP), so TSP heuristics apply. For example, suppose there are four parts, A, B, C, and D. The logic treats the setup time between two parts as the “distance between two cities” and can therefore apply the logic of visiting all the cities in the sequence that minimizes total distance, subject to the customer demand (delivery date) constraints.
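For four parts, the analogy can be checked exhaustively, since there are only 4! = 24 sequences. The sketch below treats the setup-time matrix as asymmetric distances, assumes the machine is already set up for whichever part runs first, and rejects sequences that miss a due time. All numbers are hypothetical.

```python
from itertools import permutations

# Hypothetical setup hours: SETUP[from_part][to_part]; note A->B differs from B->A.
SETUP = {
    "A": {"B": 1.5, "C": 3.0, "D": 2.0},
    "B": {"A": 1.0, "C": 2.5, "D": 0.5},
    "C": {"A": 3.0, "B": 2.0, "D": 1.0},
    "D": {"A": 2.5, "B": 0.5, "C": 1.5},
}
MACHINING = {"A": 2.0, "B": 1.0, "C": 3.0, "D": 1.5}  # hypothetical hours
DUE = {"A": 5.0, "B": 20.0, "C": 20.0, "D": 20.0}     # hypothetical hours from now

def total_setup(seq):
    """The 'distance travelled': sum of changeover times between consecutive parts."""
    return sum(SETUP[a][b] for a, b in zip(seq, seq[1:]))

def meets_due_dates(seq):
    """Assumes the machine is already set up for the first part."""
    clock, prev = 0.0, None
    for part in seq:
        clock += (SETUP[prev][part] if prev is not None else 0.0) + MACHINING[part]
        if clock > DUE[part]:
            return False
        prev = part
    return True

def best_sequence(parts, enforce_due=True):
    """Exhaustive search over all orderings; only practical for a handful of parts."""
    candidates = [s for s in permutations(parts) if not enforce_due or meets_due_dates(s)]
    return min(candidates, key=total_setup) if candidates else None

print(best_sequence("ABCD", enforce_due=False))  # ('C', 'D', 'B', 'A'), 2.5 setup hours
print(best_sequence("ABCD"))                     # ('A', 'B', 'D', 'C'), 3.5 setup hours
```

Without the due-date constraint, C-D-B-A needs only 2.5 setup hours but finishes A at hour 10; with A due at hour 5, the best on-time sequence is A-B-D-C at 3.5 setup hours.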

Remember that there is no closed-form formula that solves this problem; like the TSP, it is NP-hard. Branch and Bound (B&B) can find the optimal sequence, but its running time can grow exponentially with the number of parts. Given the 3-hour deadline assumed in Inputs, B&B may be too time-consuming.
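A minimal depth-first Branch and Bound sketch, assuming a single machine that is already set up for the first part, with hypothetical data. The lower bound used here (each unscheduled part must pay at least its cheapest possible incoming setup) is one simple choice among many: it prunes branches that cannot beat the best sequence found so far, and any branch that makes a part late is dropped immediately.

```python
import math

# Hypothetical data: SETUP[from_part][to_part] in hours.
SETUP = {
    "A": {"B": 1.5, "C": 3.0, "D": 2.0},
    "B": {"A": 1.0, "C": 2.5, "D": 0.5},
    "C": {"A": 3.0, "B": 2.0, "D": 1.0},
    "D": {"A": 2.5, "B": 0.5, "C": 1.5},
}
MACHINING = {"A": 2.0, "B": 1.0, "C": 3.0, "D": 1.5}
DUE = {"A": 5.0, "B": 20.0, "C": 20.0, "D": 20.0}

def branch_and_bound(parts):
    best = {"cost": math.inf, "seq": None}

    def lower_bound(last, remaining):
        # Every unscheduled part still needs at least its cheapest incoming setup.
        return sum(min(SETUP[src][r] for src in remaining | {last} if src != r)
                   for r in remaining)

    def extend(seq, cost, clock, remaining):
        if not remaining:
            if cost < best["cost"]:
                best["cost"], best["seq"] = cost, list(seq)
            return
        last = seq[-1]
        if cost + lower_bound(last, remaining) >= best["cost"]:
            return  # bound: this branch cannot beat the incumbent
        # Branch on the cheapest changeovers first so good incumbents are found early.
        for nxt in sorted(remaining, key=lambda p: SETUP[last][p]):
            finish = clock + SETUP[last][nxt] + MACHINING[nxt]
            if finish > DUE[nxt]:
                continue  # infeasible: nxt would ship late
            seq.append(nxt)
            extend(seq, cost + SETUP[last][nxt], finish, remaining - {nxt})
            seq.pop()

    for first in parts:
        if MACHINING[first] <= DUE[first]:
            extend([first], 0.0, MACHINING[first], set(parts) - {first})
    return best["seq"], best["cost"]

print(branch_and_bound(["A", "B", "C", "D"]))  # (['A', 'B', 'D', 'C'], 3.5)
```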

To generate the training data the NN model will learn from, we need to create several thousand B&B or TSP heuristic solutions to sample problem instances (this can be done offline in the cloud, using data from the ERP system).
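A minimal sketch of the offline data-generation loop. For brevity, it uses exhaustive search as a stand-in for B&B (feasible only for a handful of parts) and a random instance generator in place of real ERP data; the record layout, value ranges, and function names are all hypothetical.

```python
import random
from itertools import permutations

def random_instance(rng, n_parts=5):
    """Hypothetical generator; real instances would be sampled from ERP history."""
    parts = [f"P{i}" for i in range(n_parts)]
    setup = {a: {b: round(rng.uniform(0.25, 3.0), 2) for b in parts if b != a} for a in parts}
    machining = {p: round(rng.uniform(0.5, 4.0), 2) for p in parts}
    due = {p: round(rng.uniform(5.0, 30.0), 1) for p in parts}
    return {"parts": parts, "setup": setup, "machining": machining, "due": due}

def solve_exactly(inst):
    """Exhaustive stand-in for B&B: minimum total setup among on-time sequences."""
    best, best_cost = None, float("inf")
    for seq in permutations(inst["parts"]):
        clock = cost = 0.0
        for i, part in enumerate(seq):
            s = inst["setup"][seq[i - 1]][part] if i > 0 else 0.0
            clock += s + inst["machining"][part]
            cost += s
            if clock > inst["due"][part]:
                break  # this sequence misses a due time
        else:  # runs only if no part was late
            if cost < best_cost:
                best, best_cost = list(seq), cost
    return best, best_cost

def build_training_set(n_examples, seed=0, max_attempts=10_000):
    rng = random.Random(seed)
    examples = []
    for _ in range(max_attempts):
        if len(examples) == n_examples:
            break
        inst = random_instance(rng)
        seq, cost = solve_exactly(inst)
        if seq is not None:  # skip instances with no on-time sequence
            examples.append({"instance": inst, "target_sequence": seq, "setup_cost": cost})
    return examples

data = build_training_set(20)
print(len(data), data[0]["target_sequence"], data[0]["setup_cost"])
```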

Training the Neural Network

These solutions are then used to train the Neural Network. The training process adjusts the network's weights so that, for a given set of jobs (for example, the four parts A, B, C, and D), its output matches the offline cloud solution. When presented with a similar set of jobs in the future, the trained Neural Network can then calculate an answer in minutes. It does not enumerate every possible sequence of thousands of parts; instead, it uses what it learned from the Branch and Bound examples to produce a sequence that approximately minimizes total setup time.
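A deliberately tiny sketch of the training idea as imitation learning: the model learns to reproduce the expert's choice of the next part at each step, then builds a sequence greedily. To stay short and dependency-free, the "network" here is a single linear layer with a softmax over candidate parts, trained by gradient descent on cross-entropy; a production model would likely be deeper. The features, instance generator, and exhaustive-search expert (standing in for offline B&B) are all hypothetical.

```python
import math
import random
from itertools import permutations

def random_instance(rng, n=5):
    """Hypothetical instance: setup matrix, machining hours, due hours."""
    setup = [[0.0 if a == b else rng.uniform(0.25, 3.0) for b in range(n)] for a in range(n)]
    machining = [rng.uniform(0.5, 4.0) for _ in range(n)]
    due = [rng.uniform(5.0, 30.0) for _ in range(n)]
    return setup, machining, due

def expert_sequence(setup, machining, due):
    """Exhaustive stand-in for the offline B&B solver (small n only)."""
    best, best_cost = None, math.inf
    for seq in permutations(range(len(machining))):
        clock = cost = 0.0
        for i, p in enumerate(seq):
            s = setup[seq[i - 1]][p] if i else 0.0
            clock, cost = clock + s + machining[p], cost + s
            if clock > due[p]:
                break
        else:
            if cost < best_cost:
                best, best_cost = list(seq), cost
    return best

def features(inst, last, clock, cand):
    """Hypothetical features for choosing `cand` next: setup, slack, machining time."""
    setup, machining, due = inst
    s = setup[last][cand] if last is not None else 0.0
    slack = due[cand] - (clock + s + machining[cand])
    return [s / 3.0, slack / 30.0, machining[cand] / 4.0]

def score(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def advance(inst, last, clock, part):
    setup, machining, _ = inst
    return clock + (setup[last][part] if last is not None else 0.0) + machining[part]

def decision_steps(inst, seq):
    """Turn one expert sequence into (candidate features, index of expert choice) pairs."""
    steps, last, clock, remaining = [], None, 0.0, sorted(seq)
    for p in seq:
        if len(remaining) > 1:
            steps.append(([features(inst, last, clock, c) for c in remaining], remaining.index(p)))
        clock, last = advance(inst, last, clock, p), p
        remaining.remove(p)
    return steps

def loss_and_grad(w, steps):
    """Mean softmax cross-entropy over all decisions, and its gradient."""
    loss, grad = 0.0, [0.0] * len(w)
    for feats, target in steps:
        scores = [score(w, x) for x in feats]
        exps = [math.exp(z - max(scores)) for z in scores]
        total = sum(exps)
        probs = [e / total for e in exps]
        loss -= math.log(probs[target])
        for k in range(len(w)):
            grad[k] += sum(p * x[k] for p, x in zip(probs, feats)) - feats[target][k]
    return loss / len(steps), [g / len(steps) for g in grad]

def train(steps, lr=0.1, epochs=100):
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        _, grad = loss_and_grad(w, steps)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

def predict_sequence(w, inst):
    """Greedy decoding: repeatedly pick the highest-scoring remaining part."""
    seq, last, clock, remaining = [], None, 0.0, list(range(len(inst[1])))
    while remaining:
        nxt = max(remaining, key=lambda c: score(w, features(inst, last, clock, c)))
        clock, last = advance(inst, last, clock, nxt), nxt
        seq.append(nxt)
        remaining.remove(nxt)
    return seq

rng = random.Random(0)
steps = []
for _ in range(100):
    inst = random_instance(rng)
    seq = expert_sequence(*inst)
    if seq is not None:
        steps.extend(decision_steps(inst, seq))

w = train(steps)
print("loss before:", loss_and_grad([0.0, 0.0, 0.0], steps)[0])
print("loss after: ", loss_and_grad(w, steps)[0])
print("predicted sequence:", predict_sequence(w, random_instance(rng)))
```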

Those of us who frequently solve optimization problems know that reaching the objective function's global optimum takes a lot of computing time. Still, you can often get to within about 95% of it very quickly, and in most cases that 95% is sufficient. The same is true of Neural Networks.
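A minimal sketch of how that "95%" can be measured on small instances: compare a fast greedy (nearest-neighbor) heuristic against the exact optimum found by exhaustive search. The instances are random and hypothetical, due dates are ignored for brevity, and the printed ratio depends on the data; it is not a result from the source.

```python
import random
from itertools import permutations

def path_cost(setup, seq):
    """Total setup time along a sequence of part indices."""
    return sum(setup[a][b] for a, b in zip(seq, seq[1:]))

def exact_best(setup):
    """Optimal total setup over every ordering (slow: n! sequences)."""
    return min(path_cost(setup, p) for p in permutations(range(len(setup))))

def nearest_neighbor(setup, start):
    """Fast greedy heuristic: always switch to the part with the cheapest next setup."""
    seq, remaining = [start], set(range(len(setup))) - {start}
    while remaining:
        last = seq[-1]
        nxt = min(remaining, key=lambda p: setup[last][p])
        seq.append(nxt)
        remaining.remove(nxt)
    return path_cost(setup, seq)

def quality_ratio(setup):
    """Optimal cost / heuristic cost: 1.0 is optimal; 0.95 is roughly within 5%."""
    heuristic = min(nearest_neighbor(setup, s) for s in range(len(setup)))
    return exact_best(setup) / heuristic

rng = random.Random(42)
ratios = []
for _ in range(30):
    n = 6
    setup = [[0.0 if a == b else rng.uniform(0.25, 3.0) for b in range(n)] for a in range(n)]
    ratios.append(quality_ratio(setup))
print(f"mean quality ratio: {sum(ratios) / len(ratios):.3f}")
```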

Validate Through Simulation

A final verification of each recommendation can be done with a simulation program. Many manufacturing operations already have such simulation models in place, and running the Neural Network's recommendations through them helps confirm that its training is still current.
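A minimal Monte Carlo sketch of that verification step, assuming a single machine already set up for the first part and uniform ±10% variation in setup and machining times. The data, the noise model, and the 95% acceptance threshold are hypothetical; a plant's existing simulation model would replace `simulate`.

```python
import random

# Hypothetical data: SETUP[from_part][to_part] in hours.
SETUP = {
    "A": {"B": 1.5, "C": 3.0, "D": 2.0},
    "B": {"A": 1.0, "C": 2.5, "D": 0.5},
    "C": {"A": 3.0, "B": 2.0, "D": 1.0},
    "D": {"A": 2.5, "B": 0.5, "C": 1.5},
}
MACHINING = {"A": 2.0, "B": 1.0, "C": 3.0, "D": 1.5}
DUE = {"A": 5.0, "B": 20.0, "C": 20.0, "D": 20.0}

def simulate(sequence, noise, rng):
    """One simulated run; returns the parts that finish after their due time."""
    clock, prev, late = 0.0, None, []
    for part in sequence:
        planned = (SETUP[prev][part] if prev is not None else 0.0) + MACHINING[part]
        clock += planned * (1.0 + rng.uniform(-noise, noise))  # random +/- variation
        if clock > DUE[part]:
            late.append(part)
        prev = part
    return late

def on_time_rate(sequence, noise=0.1, runs=1000, seed=0):
    """Fraction of simulated runs in which every part ships on time."""
    rng = random.Random(seed)
    return sum(not simulate(sequence, noise, rng) for _ in range(runs)) / runs

THRESHOLD = 0.95  # hypothetical acceptance level
for recommended in (["A", "B", "D", "C"], ["C", "D", "B", "A"]):
    rate = on_time_rate(recommended)
    action = "release to shop floor" if rate >= THRESHOLD else "review sequence / retrain model"
    print(recommended, f"{rate:.1%}", action)
# ['A', 'B', 'D', 'C'] 100.0% release to shop floor
# ['C', 'D', 'B', 'A'] 0.0% review sequence / retrain model
```

A persistently low on-time rate for the network's recommendations is one practical signal that its training data no longer reflects the shop floor.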

The Bridge Between Theory and Implementation

While the logic seems straightforward in theory, the real world presents challenges, and the central challenge is not the Neural Network algorithm. The first challenge is to understand where you want to implement this capability. Some of the questions you need to address here are:

(1) Where will the algorithm sit within the manufacturing planning systems landscape, and how will its output be consumed?

(2) Are data consistency and integrity in place to support training such a model?

(3) Will the capability required to build such a model be developed internally, or will an external partner help you?

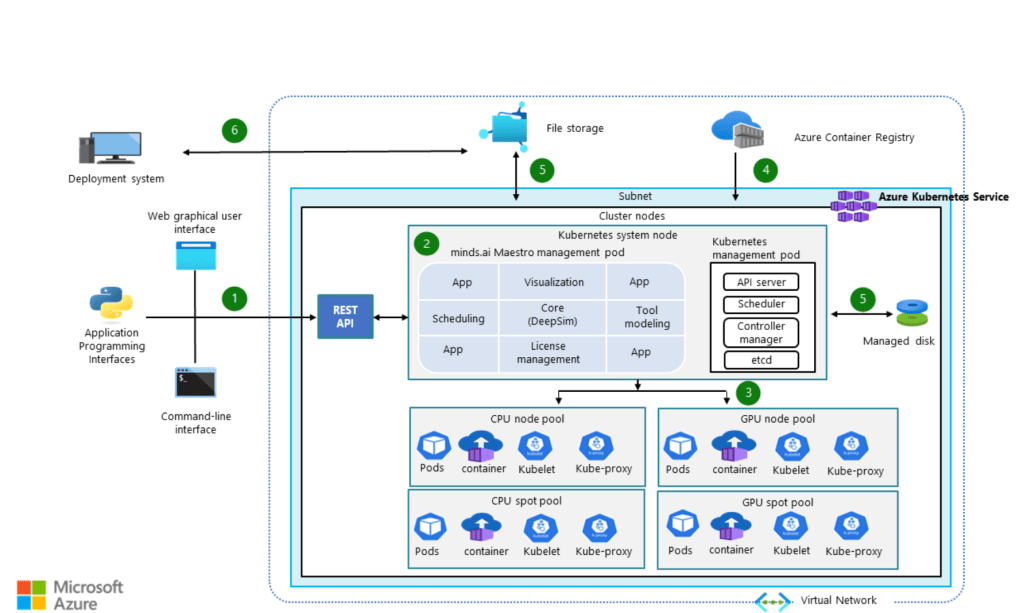

The good news is that, from a technology availability and readiness perspective, there is nothing futuristic about this. The only ingredient that may be lacking is the willpower and courage to take that bold step and experiment. For example, Figure 3 shows an architecture that leverages Microsoft Azure. You can build the capabilities discussed in this article by leveraging a similar architecture and pairing it with appropriate algorithms.

Figure 3: Automated scheduling and dispatching for semiconductor manufacturing

In many industries, such a real-time automated system may bring much better process quality and efficiency. If you have invested in intelligent manufacturing capabilities and are leveraging cloud-based solutions, you have already done the foundational work. I strongly suggest you extract the maximum value from your investment by using that foundation to build differentiating capabilities.